Would VR benefit from ray tracing? Can you imagine immersing yourself in a virtual world that is indistinguishable from reality? How hard is it to render stuff in VR anyway? Well, VR headsets come with two displays, one for each eye, with pretty high resolutions that can go up to 3840x2160 per eye (that is a PiMAX 8K), and if that's not enough, the refresh rate is 90 Hz. So huge frames at crazy high refresh rates, all ray traced: a terrible situation. Do we even stand a chance?

I believe so. I'll present an idea I've had for a while that leverages the power of ReSTIR[2][3][4] and saves on our precious ray budget. It all started when someone pointed me to an existing VR mod for JKII[1] and asked if my port would include VR capabilities, so it's been in the back of my head ever since.

Disclaimer: I currently do not own a VR headset, and it's going to take me a while to get to the point where I could realistically start implementing VR, so what follows is highly theoretical. It works in my head, but I wanted to share the idea because it might help someone, or open a discussion that leads to an actual good solution. There is nothing really new here per se, just an application of already known stuff.

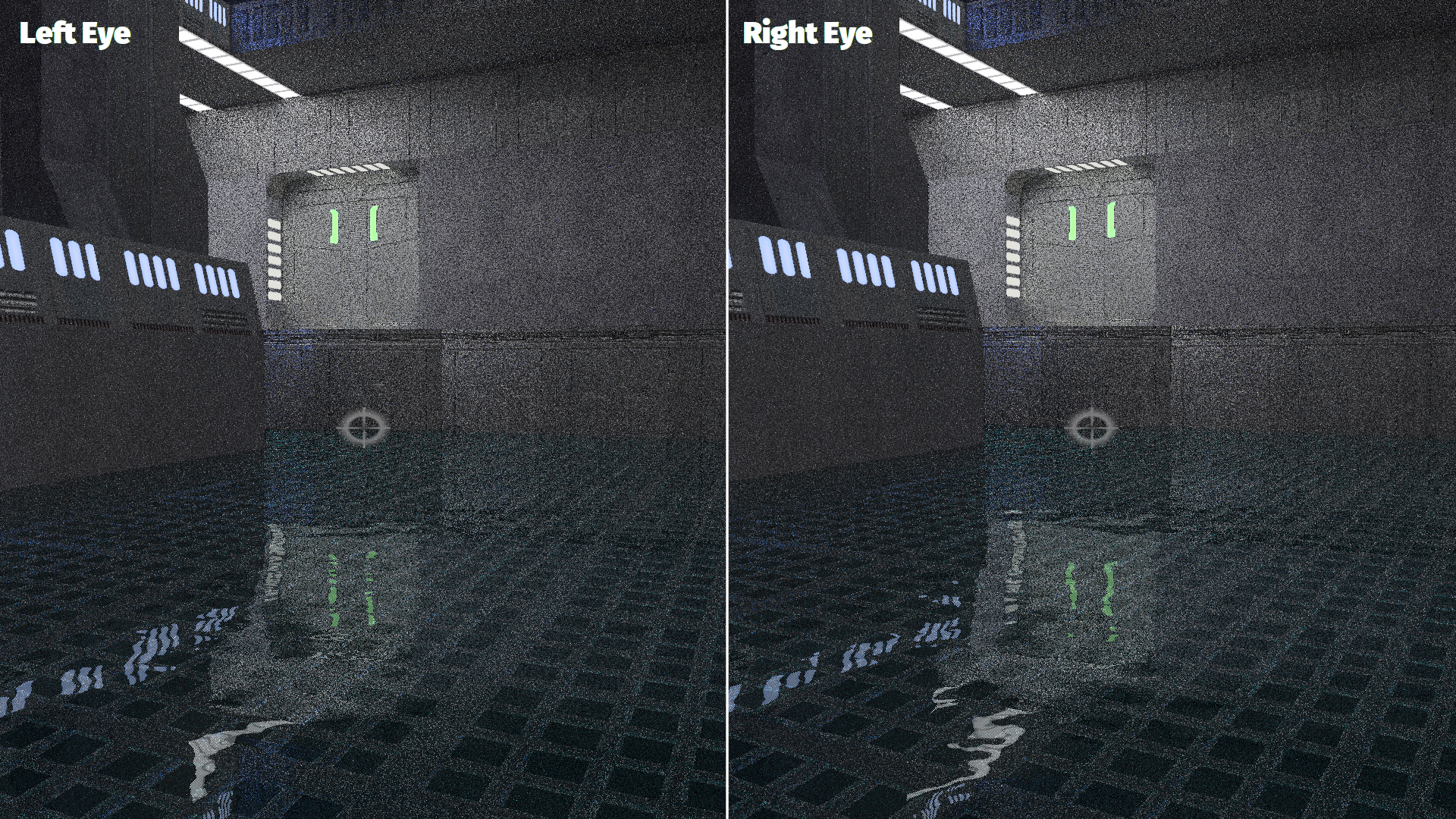

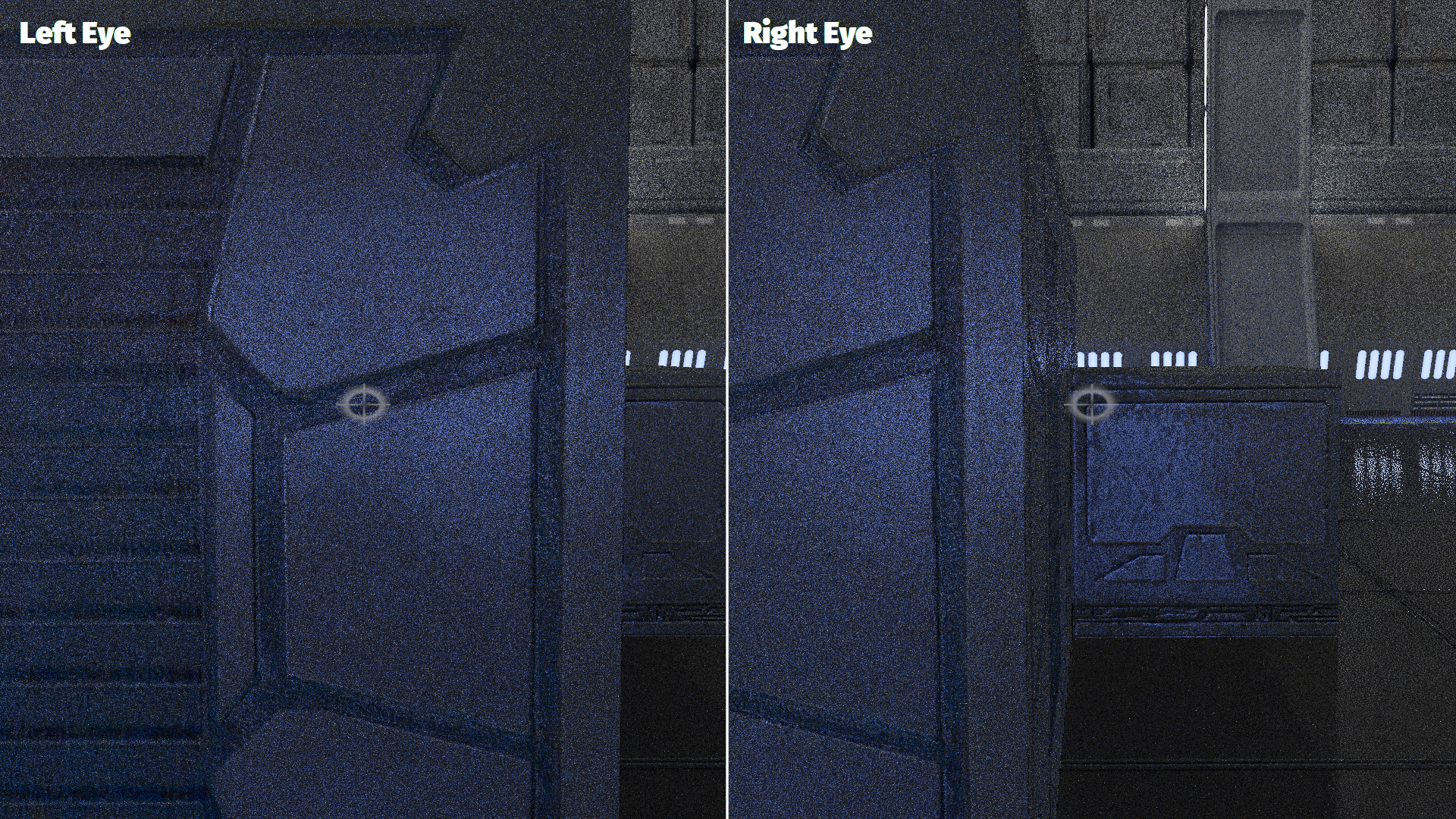

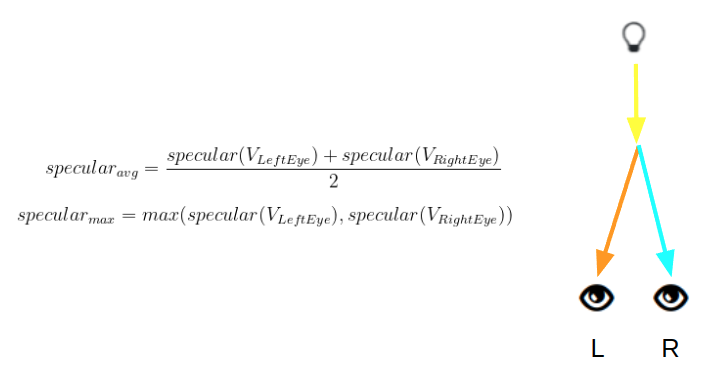

The first thing to keep in mind is that we need two cameras in our world, one for each eye, and that we need to render a frame for each. We could of course generate each one independently, but that would be pretty expensive. Now, check the following image:

As you can see, both images are almost identical, because the eyes are close to each other. Can we leverage that? Definitely: we can reuse a lot of the work done for one eye on the other.

From now on we'll use the right eye frame as the source and reuse its results on the left eye. It can be done the other way around if you want.

First we perform a normal ReSTIR pass on our right eye frame and store the resulting reservoirs in a buffer. At this point we are using one shadow ray per pixel, and we could use ReSTIR El-Cheapo[7] to save even more shadow rays. We also store the resulting radiance of the indirect illumination bounces, which is super easy for diffuse bounces and trickier for specular bounces; more on that later.
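For readers who have not seen what one of those stored reservoirs holds, here is a minimal sketch of the streaming resampling step that ReSTIR[2] builds on. It is not taken from any particular implementation: the `Reservoir` layout, the uniform light selection, and the `resample_lights` helper are all assumptions made for illustration, and the target function is reduced to one precomputed scalar per light.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Reservoir:
    sample: Optional[int] = None  # index of the light we kept (hypothetical layout)
    w_sum: float = 0.0            # running sum of resampling weights
    m: int = 0                    # number of candidates streamed so far
    W: float = 0.0                # contribution weight used when shading the sample

    def update(self, candidate: int, weight: float, rng: random.Random) -> None:
        """Stream one candidate; keep it with probability weight / w_sum."""
        self.w_sum += weight
        self.m += 1
        if weight > 0.0 and rng.random() * self.w_sum < weight:
            self.sample = candidate

    def finalize(self, target_of_sample: float) -> None:
        """W = (1 / p_hat(y)) * (w_sum / M), or 0 if the target is zero."""
        if target_of_sample > 0.0 and self.m > 0:
            self.W = self.w_sum / (self.m * target_of_sample)
        else:
            self.W = 0.0


def resample_lights(target: List[float], num_candidates: int,
                    rng: random.Random) -> Reservoir:
    """Pick candidates uniformly over the lights and resample them by p_hat."""
    source_pdf = 1.0 / len(target)
    r = Reservoir()
    for _ in range(num_candidates):
        i = rng.randrange(len(target))
        r.update(i, target[i] / source_pdf, rng)
    if r.sample is not None:
        r.finalize(target[r.sample])
    return r
```

The right eye would write one such reservoir per pixel into the buffer; everything the left eye needs in order to shade the same light later is the kept sample and its weight `W`.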

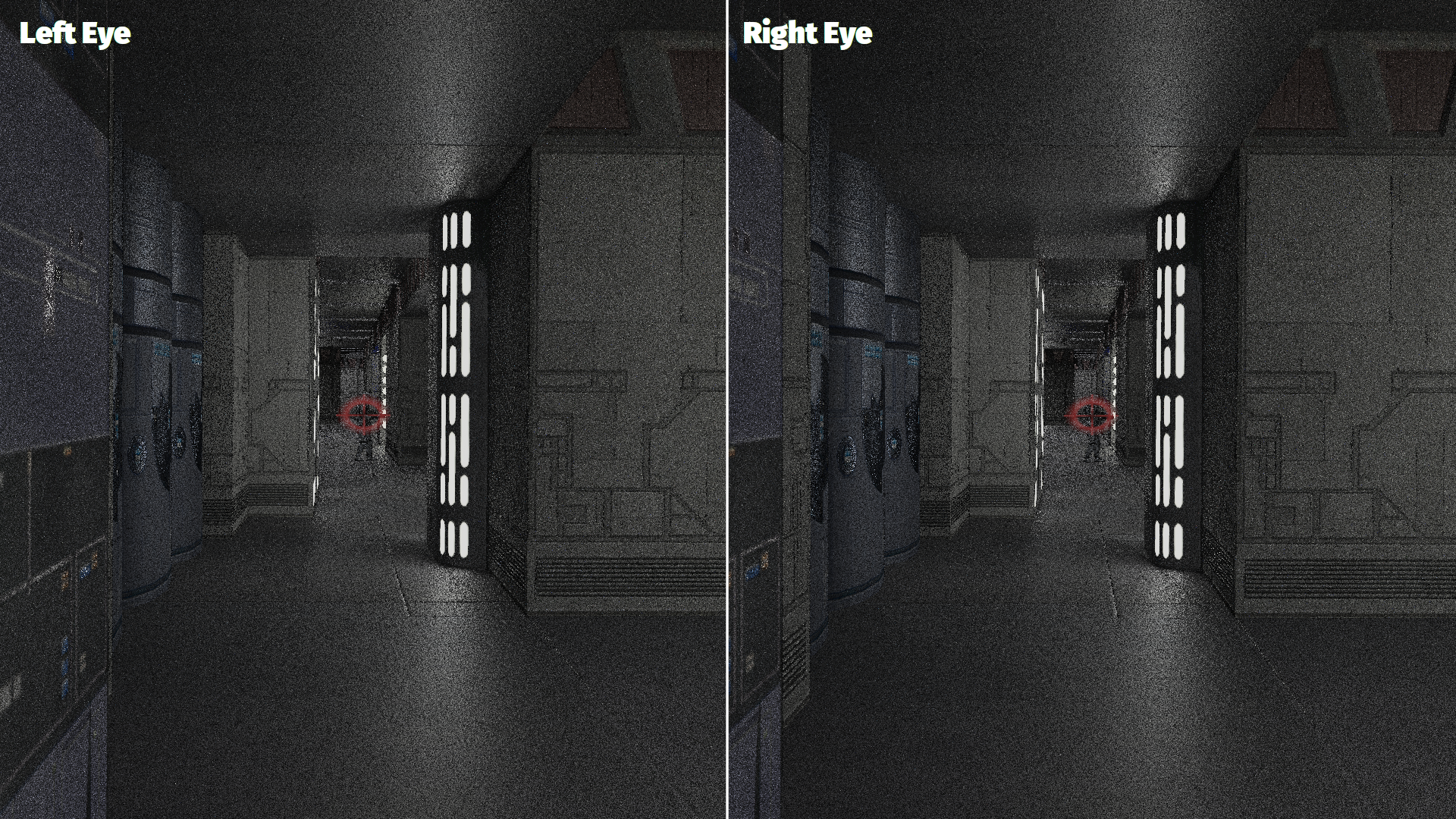

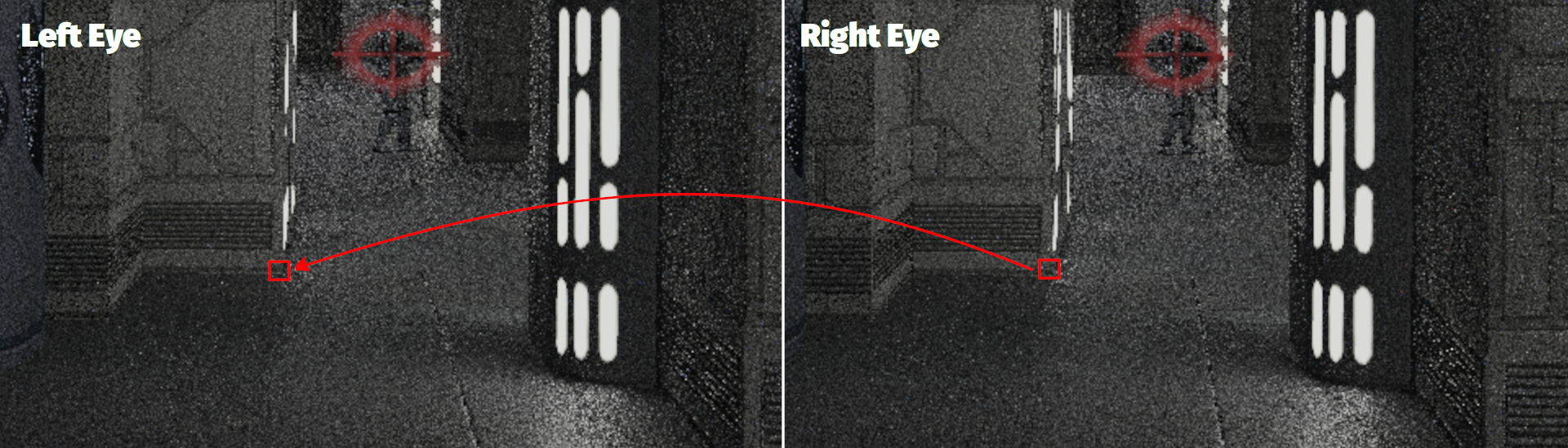

Then we need a way to map pixels from the right eye to the left eye: something that tells us that a pixel at some coordinate on the right eye can be found at some other coordinate on the left eye, or not at all if it is occluded. Since we can think of the left eye as the camera moved a little to the left, we can just calculate motion vectors from one eye to the other. There are some interesting technologies like MVR[5] that help speed up per-view position calculations; I don't know if they could be used in this particular scenario to compute said motion vectors, or if there is something similar that does. Anyway, once we have those motion vectors we can, for each pixel on the left, find the reservoir that was calculated on the right and shade it. No shadow rays or other sampling, just like that. We can also add the contribution of the indirect illumination bounces: for diffuse bounces no tracing is needed; for specular bounces, again, things get trickier. It would look something like this:

Alright, it seems we are almost done, but we have some disoccluded pixels. What do we do about those? They need the full process, and we need to keep an extra ReSTIR temporal buffer for the left eye to ensure high quality there. In the worst case the left eye is looking straight at a wall while the right eye is looking down a hall: you know, the user peeking around a corner.
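The eye-to-eye mapping and the disocclusion test could be sketched roughly like this. It assumes an idealized setup that real headsets only approximate: two rectified, parallel pinhole cameras that differ by a horizontal offset, and linear view-space depth stored per pixel. Under those assumptions the "motion vector" is just the stereo disparity `focal_px * baseline / depth` along the same row. The function names, the single-scanline layout, and the 1% depth tolerance are all made up for illustration.

```python
from typing import List, Optional


def right_eye_column(x_left: int, depth: float, focal_px: float,
                     baseline: float, width: int) -> Optional[int]:
    """Column in the right-eye image that sees the same point as left column x_left.

    With parallel cameras the row is unchanged and the horizontal shift is the
    disparity f * b / z. Returns None if there is no geometry or the point
    falls outside the right-eye image.
    """
    if depth <= 0.0:
        return None
    x_right = int(round(x_left - focal_px * baseline / depth))
    return x_right if 0 <= x_right < width else None


def reusable_mask(left_depth: List[float], right_depth: List[float],
                  focal_px: float, baseline: float,
                  rel_tol: float = 0.01) -> List[bool]:
    """For one scanline: True where the left pixel can reuse a right-eye reservoir."""
    width = len(left_depth)
    mask = []
    for x_left, z in enumerate(left_depth):
        x_right = right_eye_column(x_left, z, focal_px, baseline, width)
        # The right eye must have seen the same surface at that pixel;
        # a depth mismatch means the left pixel is disoccluded.
        ok = x_right is not None and abs(right_depth[x_right] - z) <= rel_tol * z
        mask.append(ok)
    return mask


# Toy scanline: a wall at depth 2 with a near object (depth 1) in front of it.
left = [2.0, 2.0, 2.0, 2.0, 2.0, 1.0]
right = [2.0, 2.0, 2.0, 1.0, 1.0, 2.0]
mask = reusable_mask(left, right, focal_px=10.0, baseline=0.2)
```

Pixels where the mask is `False` are the ones that fall back to the full ReSTIR pass with the left eye's own temporal buffer; in the toy scanline that is the leftmost column, which the right eye never saw, and the strip of wall that the near object hides from the right eye.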

Reusing reservoirs has several advantages. For one, we are reusing a lot of computation and saving our valuable rays. Another very important advantage has to do with how the user perceives the scene. I wasn't really aware of this issue until a question was posted on the NVIDIA developer forums[6]: basically, if we render each eye independently we can end up with slightly different lights being selected for each eye, and that can ruin perception. It's even worse with specular stuff, because each eye has a different view vector. So light reuse can really help here. Still, if we do all the sampling from the right eye's perspective, specular stuff will look great on the right eye but not so much on the left eye, so we need to do something about it.

Specular lobe, we meet again....

Let's (attempt to) tackle this specular issue for both direct and indirect lighting.

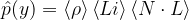

When it comes to direct lighting, ReSTIR[2] requires that we define a target function p̂(y), which is allowed to be a suboptimal approximation of what we actually want to sample. It could be something like:

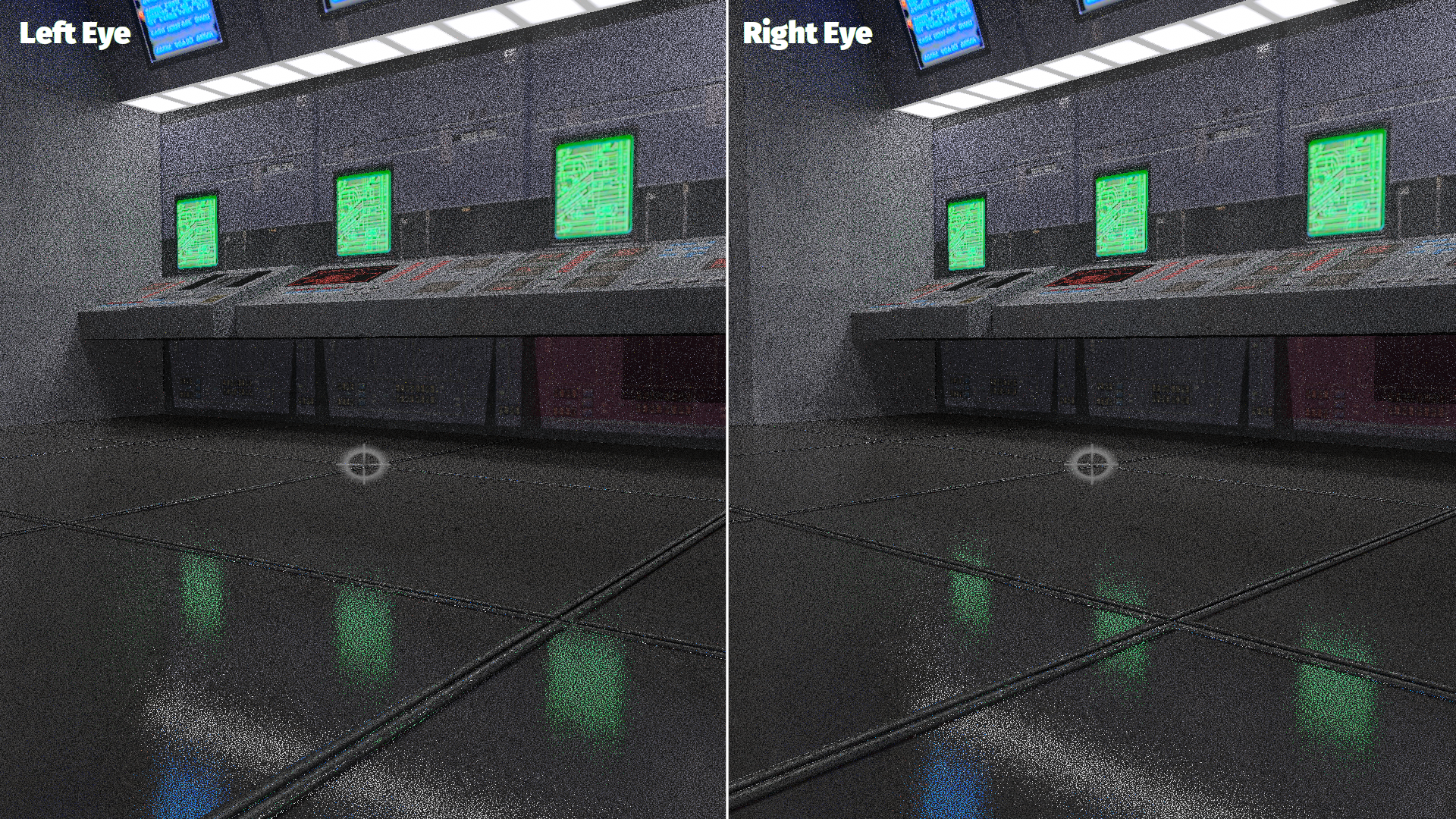

Where ρ is the BRDF, Li is the incoming radiance from our sample, N is the surface normal and L is the sample direction vector. Inside the BRDF we can have a specular part and a diffuse part. Since the specular part depends on the view vector, which one should we use, right eye or left eye? What if we use both? We could take the average of both specular lobes, or the max value of either lobe. In this way both specular lobes are represented in our p̂(y).

This should give us a better chance of having specular highlights evenly distributed across both eyes, I guess, but it needs some serious testing.
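To make the "use both lobes" idea concrete, here is a small untested-in-a-renderer sketch of such a target function. It uses a Blinn-Phong lobe purely as a stand-in for whatever specular BRDF the renderer really has, works on scalar radiance, and every name in it (`stereo_target`, `kd`, `ks`, `shininess`, `combine`) is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return v if length == 0.0 else (v[0] / length, v[1] / length, v[2] / length)


def blinn_phong(n: Vec3, l: Vec3, v: Vec3, shininess: float) -> float:
    """Stand-in specular lobe: (N . H)^shininess with H the half vector."""
    h = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))
    return max(0.0, dot(n, h)) ** shininess


def stereo_target(n: Vec3, l: Vec3, v_right: Vec3, v_left: Vec3, li: float,
                  kd: float, ks: float, shininess: float,
                  combine: str = "max") -> float:
    """p_hat(y) = Li * (diffuse + combined specular of both eyes) * max(0, N . L)."""
    n_dot_l = max(0.0, dot(n, l))
    spec_r = blinn_phong(n, l, v_right, shininess)
    spec_l = blinn_phong(n, l, v_left, shininess)
    # Either take the stronger lobe or the mean of the two.
    spec = max(spec_r, spec_l) if combine == "max" else 0.5 * (spec_r + spec_l)
    return li * (kd / math.pi + ks * spec) * n_dot_l
```

With `combine="max"`, a light that produces a highlight for either eye gets a high resampling weight; with the average, a highlight seen by only one eye is weighted at half strength. Which one behaves better is exactly the kind of thing that needs testing.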

What about indirect lighting? Can we reuse a specular sample as we did with diffuse? It could be doable as long as the sample falls within the specular lobe of both eyes; if not, well, we need to generate a new sample. The higher the specularity of a surface, the less likely it is that a sample can be reused on the other eye, so reuse depends on both specularity and the distance from the surface to the eyes. I wonder if sampling where both specular lobes intersect would be beneficial in this instance.
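One possible way to phrase that "falls within both lobes" check is sketched below. It is only an assumption about how it could be done: a Phong lobe around the mirror direction stands in for the real BRDF, and the `min_ratio` threshold is an arbitrary tuning knob, not a value from any reference.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return v if length == 0.0 else (v[0] / length, v[1] / length, v[2] / length)


def reflect(view: Vec3, normal: Vec3) -> Vec3:
    """Mirror direction of a view vector that points from the surface to the eye."""
    d = dot(view, normal)
    return (2.0 * d * normal[0] - view[0],
            2.0 * d * normal[1] - view[1],
            2.0 * d * normal[2] - view[2])


def phong_lobe(sample_dir: Vec3, view: Vec3, normal: Vec3, shininess: float) -> float:
    """Stand-in lobe: cos^shininess of the angle to the mirror direction."""
    return max(0.0, dot(sample_dir, reflect(view, normal))) ** shininess


def can_reuse_specular_sample(sample_dir: Vec3, v_right: Vec3, v_left: Vec3,
                              normal: Vec3, shininess: float,
                              min_ratio: float = 0.5) -> bool:
    """Reuse the right-eye bounce direction only if the left-eye lobe still
    gives it at least min_ratio of the response the right eye gave it."""
    right = phong_lobe(sample_dir, v_right, normal, shininess)
    left = phong_lobe(sample_dir, v_left, normal, shininess)
    return right > 0.0 and left >= min_ratio * right
```

For example, with the normal and the right-eye view both along +Z, a sample along +Z, and a left-eye view tilted slightly towards +X, the sample is still reusable at `shininess=10` but rejected at `shininess=1000`, which matches the intuition that shinier surfaces share fewer samples between eyes.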

Everything we've talked about works fine on one card, but since VR usually means two cards in SLI/Crossfire, how do we share stuff? It would be wasteful to have one card render one eye while the other waits for the resulting reservoirs. Instead it could be done the way Q2RTX and JK2/3 do it: render half of the frame on one card and the other half on the other. That way both cards run in parallel, stay independent, and never wait for each other's reservoirs or indirect light samples.

Remember that upscaling tech might be needed as well, so don't be shy and use DLSS / FSR / XeSS or whatever you have at your disposal.

So here it is. I hope this is feasible and that I can implement it in the future, so we can have some ray traced Jedi Outcast/Academy VR goodness.

Footnotes

[1] Jedi Knight II: Jedi Outcast VR

[2] Spatiotemporal reservoir resampling for real-time ray tracing with dynamic direct lighting

[3] Rearchitecting Spatiotemporal Resampling for Production

[4] How to add thousands of lights to your renderer and not die in the process

[5] Turing Multi-View Rendering in VRWorks

[6] Specular flickering with RTXDI in VR

[7] ReSTIR El-Cheapo