How hard is it to correctly estimate indirect lighting? Light tends to bounce around a lot, and an offline path tracer, like the one you'd use to render a movie, can usually afford to compute several bounces until no significant contribution is left. That's fine as long as you are not doing real-time path tracing. Real-time path tracing, however, can get really tricky: we are constrained by the number of rays per pixel, and of course we want high-quality samples that are not too noisy. Also high FPS, lots of frames. Basically, we want it all and we want it fast. Could we improve the quality of our indirect bounces, especially from the 2nd bounce on? I think so, but first let's go through some concepts real quick.

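How are paths built? This is a very important and delicate process that, of course, I'm about to break beyond repair[1]. First, we shoot a ray from our camera/eye/sensor into our scene. That ray will (hopefully) hit a surface at a point x, and now all we need to do is compute the radiance that goes back toward our camera/eye/sensor. The equation we use for that is:

$$L_o(x, \omega_o) = \int_{\Omega} \rho(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (N \cdot \omega_i)\, d\omega_i$$

This is known as the Rendering Equation™[2] (strictly speaking its reflection part, without the emitted-radiance term), where Ω is the hemisphere of directions around the surface normal N, ρ is the BRDF, Li is the incoming radiance, ωi is the incoming light direction and ωo is the outgoing direction toward the camera. This is the kind of integral we can't solve with our calculus book, so we need a numerical method; in this particular case we will use Monte-Carlo integration:

$$L_o(x, \omega_o) \approx \frac{1}{K} \sum_{j=1}^{K} \frac{\rho(x, \omega_j, \omega_o)\, L_i(x, \omega_j)\, (N \cdot \omega_j)}{p(\omega_j)}$$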

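In other words, we need a strategy to draw a random sample ωj within the hemisphere and divide by the PDF p(ωj) of said sample. Usually a lobe of the material is selected at random and then that lobe is sampled. That sample gives us the direction of our next ray: we shoot it and find another surface at x'. Now we need the radiance leaving that vertex toward our original vertex, Li(x, ωj) = Lo(x', -ωj), and for that we use the following equation, where Ω' and N' are the hemisphere and normal at x':

$$L_o(x', -\omega_j) = \int_{\Omega'} \rho(x', \omega_i, -\omega_j)\, L_i(x', \omega_i)\, (N' \cdot \omega_i)\, d\omega_i$$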

Yep, the Rendering Equation™ again, which means we Monte-Carlo it: get a new sample, shoot a ray, find another surface aaaaand... rendering equation again. We keep doing this until we get bored (hit a maximum depth) or we find a light, in which case we propagate the light back through our path and get a nice, beautiful color for our pixel. If no light is found, the path contributes nothing, so we discard it and start all over again. Keep generating paths and averaging their results into your pixel, and after a while the result converges toward the solution of the Rendering Equation™.
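To make that loop concrete, here is a minimal Python sketch of the recursion in a toy "furnace" scene. The scene is an assumption made purely so the answer is known: every surface is Lambertian with the same albedo and emits the same radiance, so every ray hits something (a light, in a sense), and the exact result for an infinitely long path is emitted / (1 - albedo). Directions use uniform hemisphere sampling; since only cos θ matters for a Lambertian BRDF here, no direction vector is ever built. `furnace_path` and `render_pixel` are hypothetical names.

```python
import math
import random


def furnace_path(albedo, emitted, max_depth, rng):
    """One path sample in a toy furnace scene (every surface is Lambertian with
    the same albedo and emits the same radiance). Returns the radiance the path
    carries back to the camera."""
    radiance = 0.0
    throughput = 1.0
    for _ in range(max_depth + 1):
        # Emission seen at the current vertex, attenuated by the path so far.
        radiance += throughput * emitted
        # Monte-Carlo step: uniform hemisphere sampling, where cos(theta) ~ U[0, 1]
        # and pdf = 1 / (2 * pi). Only cos(theta) matters for a Lambertian BRDF.
        cos_theta = rng.random()
        brdf = albedo / math.pi
        pdf = 1.0 / (2.0 * math.pi)
        throughput *= brdf * cos_theta / pdf
    return radiance


def render_pixel(albedo, emitted, max_depth, num_paths, seed=1):
    """Average many independent paths into one pixel value."""
    rng = random.Random(seed)
    total = sum(furnace_path(albedo, emitted, max_depth, rng) for _ in range(num_paths))
    return total / num_paths
```

Each individual path is noisy, but the average converges toward emitted * (1 - albedo**(max_depth + 1)) / (1 - albedo); with albedo 0.5, emission 1.0 and a depth of 30, `render_pixel(0.5, 1.0, 30, 50000)` should land very close to 2.0.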

This sounds somewhat slow and wasteful. Fortunately, there are ways to improve the performance of this algorithm, like Next Event Estimation (NEE) or better strategies for sampling the different material lobes. However, since we are dealing with real-time path tracing, we need to do even better (remember, high FPS). For that we can use ReSTIR GI[3], or ReSTIR PT[4] if you feel adventurous.

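Both algorithms are based on Resampled Importance Sampling (RIS). RIS allows us to importance sample a target function p̂(y), which can be a simplified version of the Rendering Equation™ integrand, using a cheaper source distribution p(y) that is easy to sample. We draw M candidates x1, …, xM from p, give each one a resampling weight

$$w(x_i) = \frac{\hat{p}(x_i)}{p(x_i)}$$

and select one of them, y, with probability proportional to its weight. Using weights like these we get a RIS estimator of our integral that looks like so[3], where f is the full integrand ρ Li (N·ω):

$$\langle L \rangle_{\text{RIS}} = \frac{f(y)}{\hat{p}(y)} \cdot \frac{1}{M} \sum_{i=1}^{M} w(x_i)$$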

This is a much better estimator than plugging p(y) directly into our Monte-Carlo estimator, as long as p̂ follows the integrand more closely than p does.
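A minimal Python sketch of a single RIS estimate, under toy assumptions of my own: we integrate f(x) = x² over [0, 1] (exact answer 1/3), draw candidates from the uniform source p(x) = 1, and use the cheaper, only roughly right target p̂(x) = x. `ris_estimate` is a hypothetical helper for illustration, not code from [3].

```python
import random


def ris_estimate(f, p_hat, sample_p, pdf_p, num_candidates, rng):
    """Single-sample RIS estimate of the integral of f.
    Candidates x_i ~ p, weights w_i = p_hat(x_i) / p(x_i), select y with
    probability proportional to w_i, return f(y) / p_hat(y) * (1/M) * sum(w_i)."""
    candidates = [sample_p(rng) for _ in range(num_candidates)]
    weights = [p_hat(x) / pdf_p(x) for x in candidates]
    w_sum = sum(weights)
    if w_sum == 0.0:
        return 0.0  # no candidate had any target value
    y = rng.choices(candidates, weights=weights, k=1)[0]
    # W stands in for 1 / pdf(y): the selected sample is only approximately ~ p_hat.
    W = (1.0 / p_hat(y)) * (w_sum / num_candidates)
    return f(y) * W
```

Averaging many of these estimates approaches 1/3. Dividing by p̂(y) is safe because a candidate with p̂ = 0 has zero weight and can never be selected.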

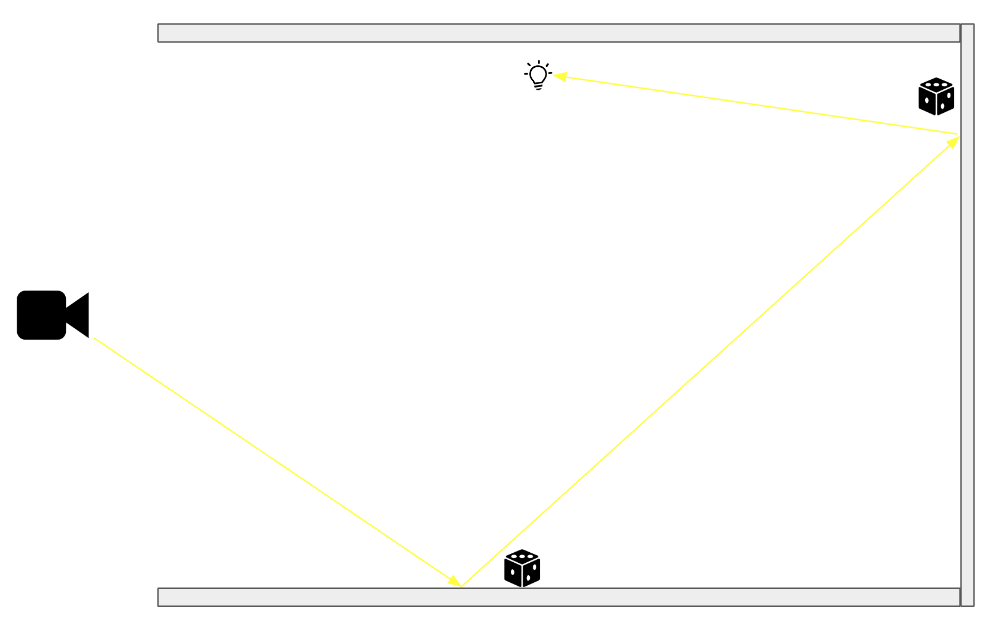

So how would we go about generating a sample? First, we shoot a primary ray from our sensor/camera/eye into the scene. Once at a surface, we sample a material lobe with PDF p(y), which yields a direction ωj. Using said direction we then build one of the aforementioned paths and calculate the radiance it brings in; (the luminance of) that radiance can be used as our p̂(y)[3]. A very interesting observation is that a whole path can be represented by a single RIS weight; this will come in handy later on.

Plug that into a reservoir and then resample it with other reservoirs, both spatially and temporally[3][4]. Of course, there are some reconnection conditions that must be met for a neighboring sample to be used; more on reconnection later.
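The reservoir itself is just weighted reservoir sampling. Below is a minimal Python sketch; the field names (`y`, `w_sum`, `M`, `W`) follow common ReSTIR notation, but the class and the `combine` helper are my own illustration. `combine` uses the simple 1/M normalization, which stays unbiased only when all reservoirs share the same target function; for the general case, and for path reconnections that also need a Jacobian term, see [3] and [4].

```python
import random
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Reservoir:
    y: Optional[Any] = None  # currently selected sample (e.g. a path)
    w_sum: float = 0.0       # running sum of resampling weights
    M: int = 0               # number of candidates seen so far
    W: float = 0.0           # contribution weight, used in place of 1 / pdf(y)

    def update(self, x, w, rng):
        """Stream one candidate: keep x with probability w / w_sum."""
        self.w_sum += w
        self.M += 1
        if w > 0.0 and rng.random() < w / self.w_sum:
            self.y = x

    def finalize(self, p_hat):
        """Compute W = w_sum / (M * p_hat(y)) once all candidates are in."""
        target = p_hat(self.y) if self.y is not None else 0.0
        self.W = self.w_sum / (self.M * target) if target > 0.0 else 0.0


def combine(reservoirs, p_hat, rng):
    """Merge reservoirs (e.g. temporal and spatial neighbors) with 1/M weights."""
    out = Reservoir()
    total_m = 0
    for r in reservoirs:
        if r.y is not None:
            # Re-weight each reservoir's sample with the *current* target function.
            out.update(r.y, p_hat(r.y) * r.W * r.M, rng)
        total_m += r.M
    out.M = total_m  # the merged reservoir represents every candidate seen
    out.finalize(p_hat)
    return out
```

Each pixel streams its own candidate paths through `update`, calls `finalize` with its p̂, and during temporal and spatial reuse feeds its own and its neighbors' reservoirs to `combine`.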

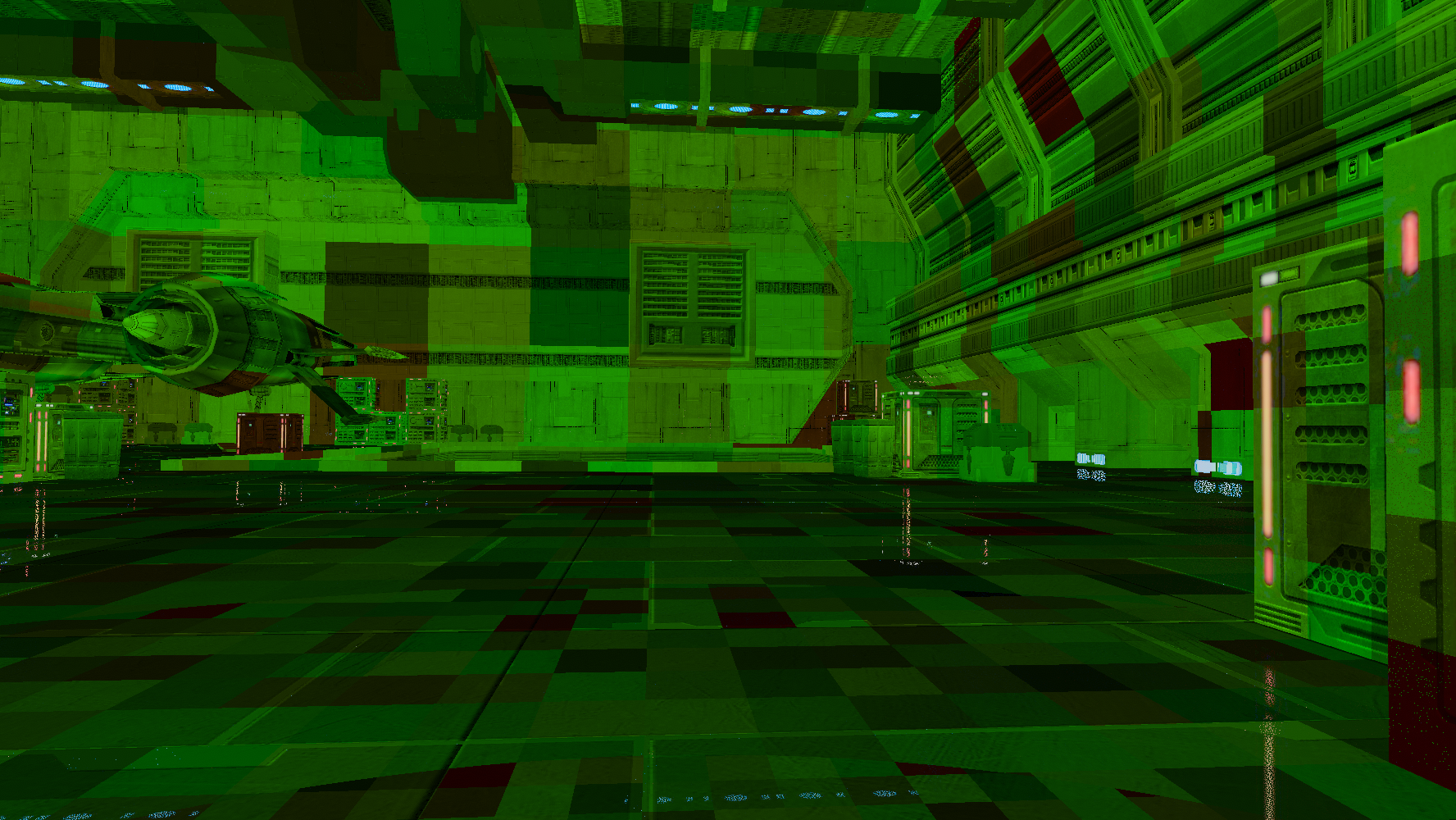

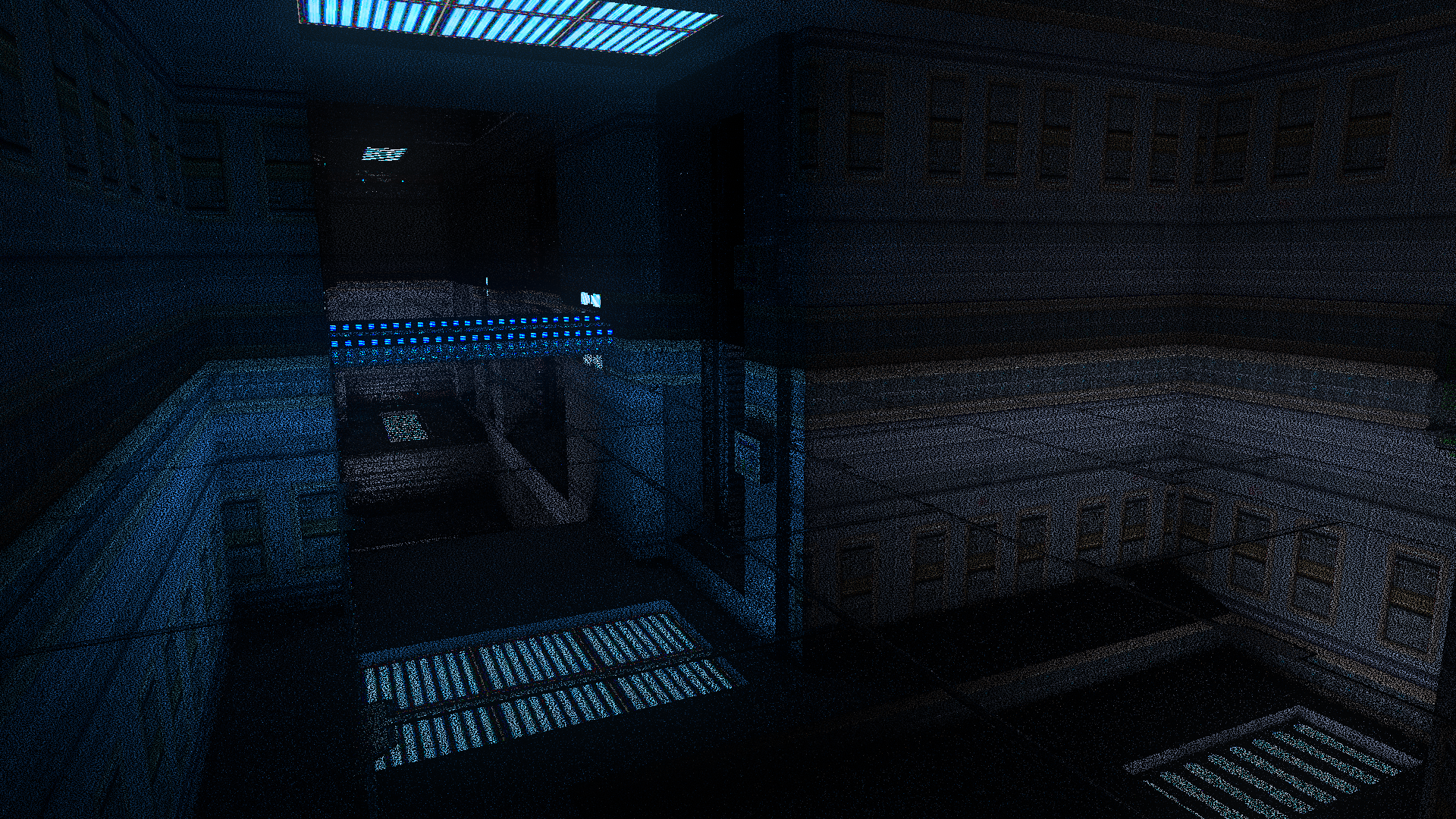

Results can be really good.

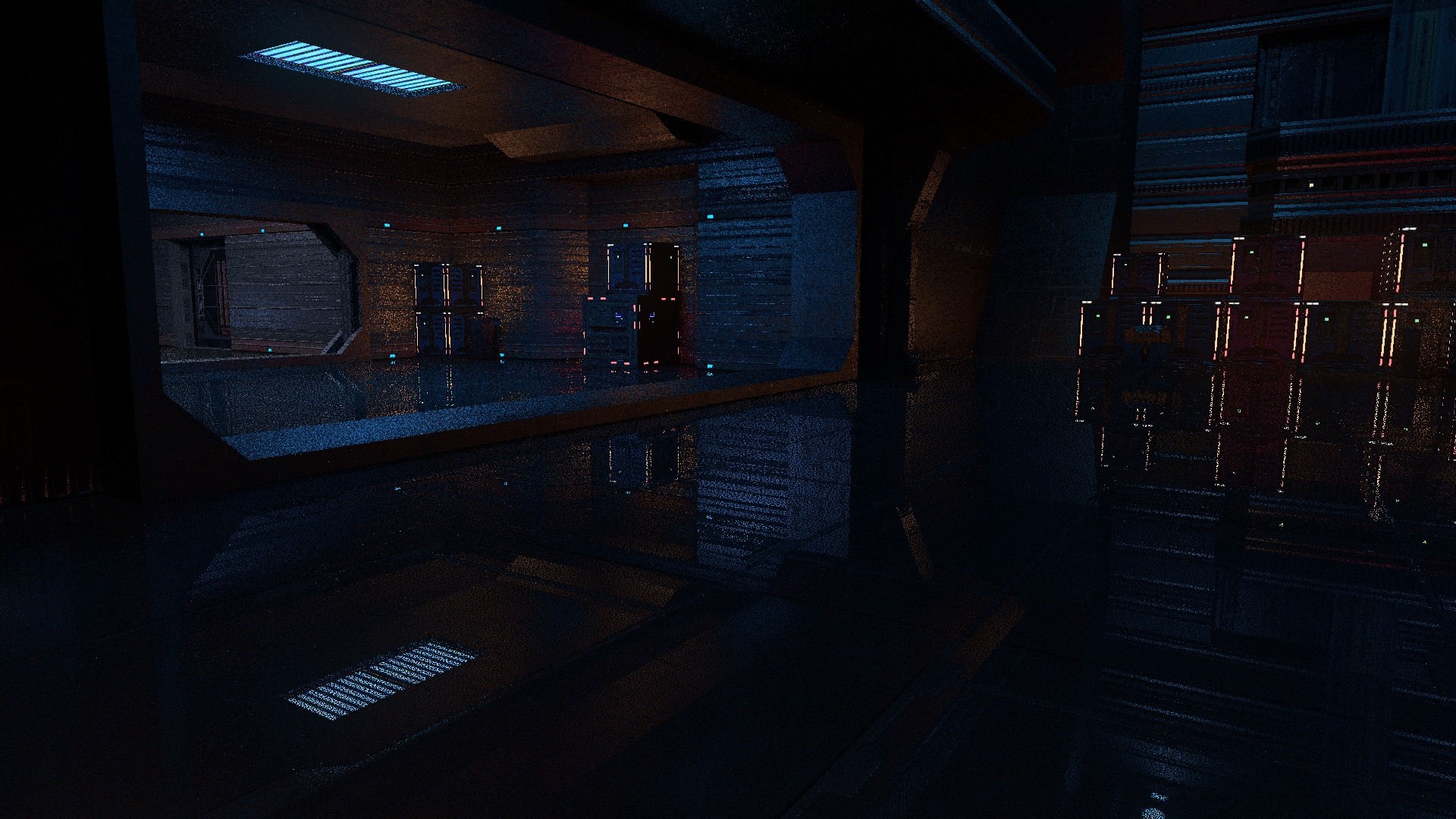

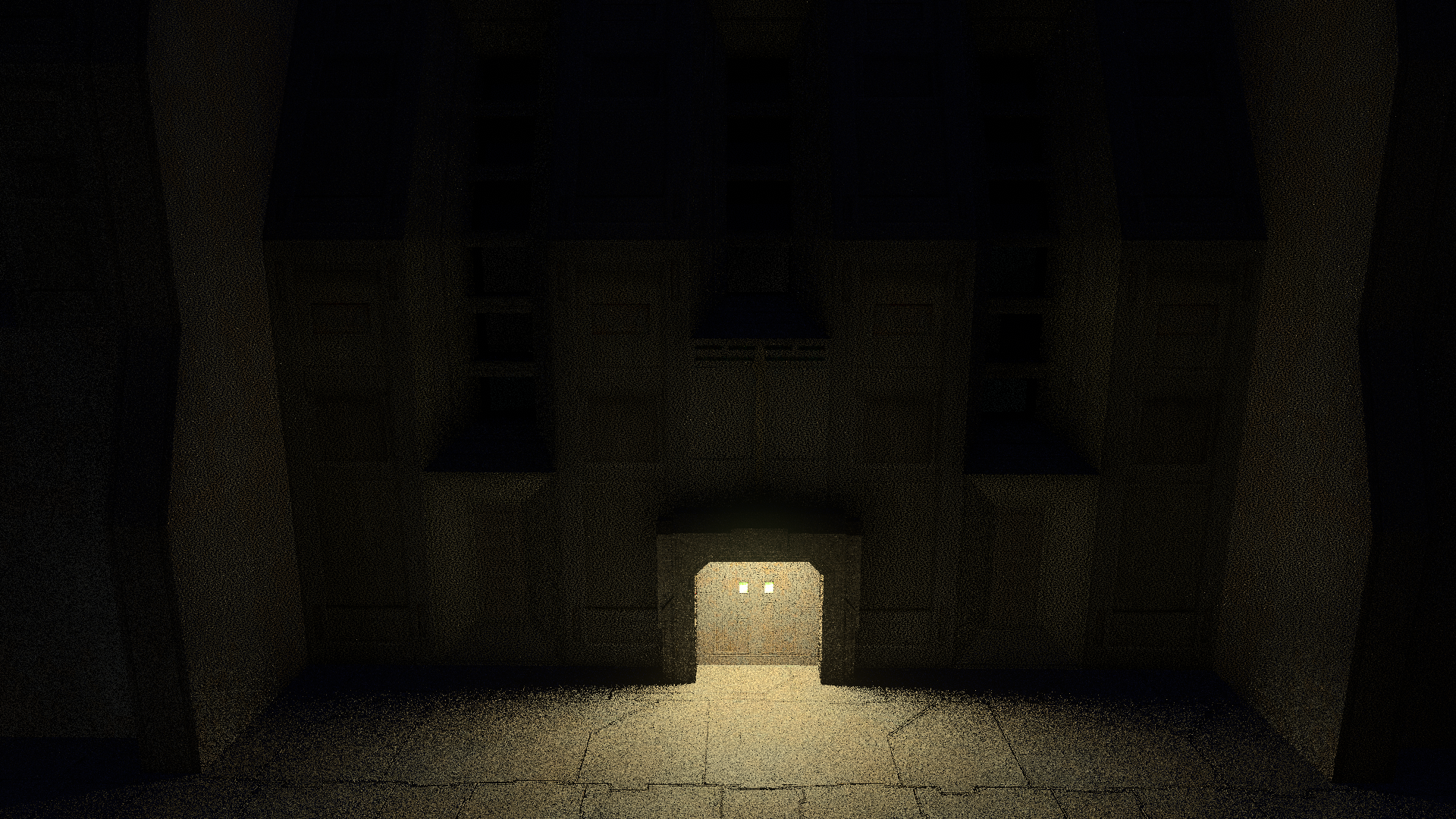

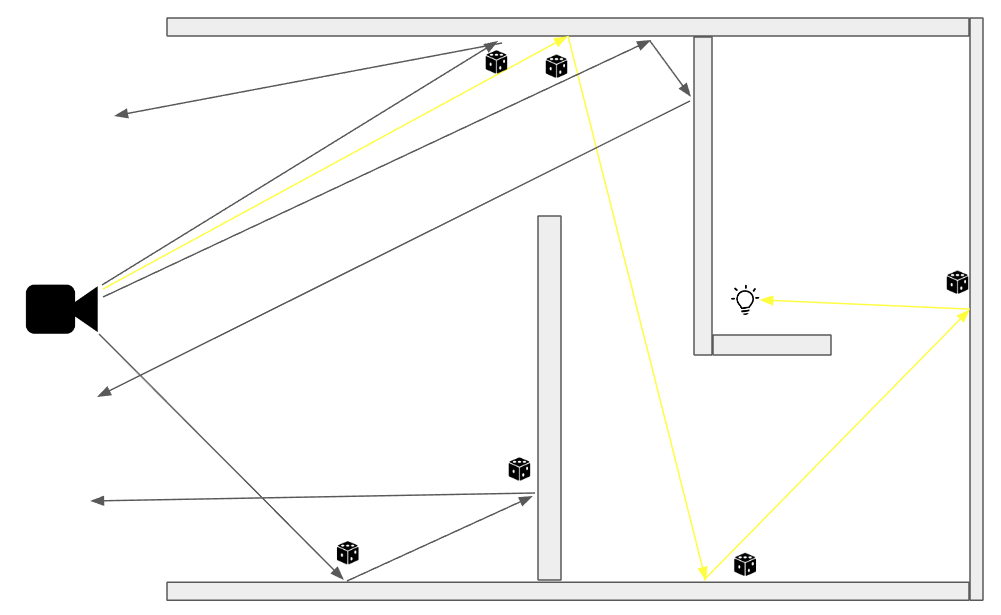

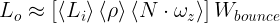

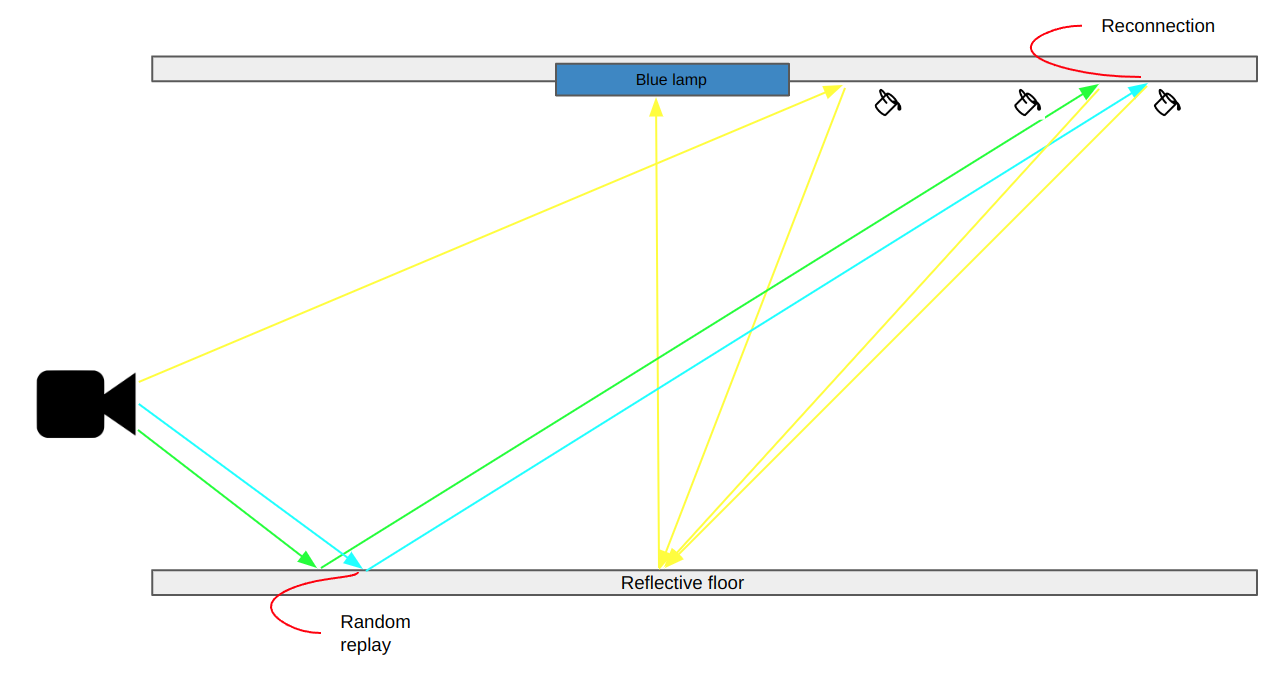

Now let's see the following scene:

In ReSTIR GI, one of the pixels will eventually find the correct path by chance, store it in a reservoir (represented by a bucket) and then share it with its neighbors. Sharing is caring.

Pixels at the top are happy, but what happens with the pixels at the bottom? What are the odds of those finding the right path? It can take them a while, so can we do something about that?

Remember that each bounce's contribution can be computed with Monte-Carlo integration of the Rendering Equation™, which means we can also use a RIS estimator there: the same thing we did with the whole path, but for a path segment. Here ωj is the direction we cheaply sampled from our BRDF with PDF p(y), and p̂(y) can be the luminance of the radiance that the rest of the path brings back to that vertex.
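The same RIS machinery applied to a single bounce can be sketched in Python as follows. Assumptions, all mine: the bounce vertex is Lambertian with an RGB albedo, candidates come from cosine-weighted hemisphere sampling (p = cos θ / π), `incoming_radiance` is a hypothetical stand-in for tracing the rest of the path, and p̂ is the Rec. 709 luminance of that radiance. The RGB integrand f is evaluated only for the selected direction and multiplied by the bounce reservoir weight.

```python
import math
import random


def luminance(rgb):
    """Rec. 709 luminance: the scalar target p_hat needs, while f stays RGB."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def incoming_radiance(cos_theta):
    """Hypothetical stand-in for tracing the rest of the path along a direction
    and returning the RGB radiance it brings back to the bounce vertex."""
    v = cos_theta ** 4
    return (v, 0.5 * v, 0.25 * v)


def bounce_ris(albedo_rgb, num_candidates, rng):
    """RIS at one Lambertian bounce. Returns the RGB estimate f(y) * W_bounce."""
    candidates, weights = [], []
    for _ in range(num_candidates):
        # Cosine-weighted sampling: cos(theta) = sqrt(u), pdf = cos(theta) / pi.
        # The toy radiance depends only on cos(theta), so no vector is built.
        cos_theta = math.sqrt(rng.random())
        pdf = cos_theta / math.pi
        if pdf == 0.0:
            continue  # zero-weight candidate: still counted in M below
        candidates.append(cos_theta)
        weights.append(luminance(incoming_radiance(cos_theta)) / pdf)
    w_sum = sum(weights)
    if w_sum == 0.0:
        return (0.0, 0.0, 0.0)
    y = rng.choices(candidates, weights=weights, k=1)[0]
    li = incoming_radiance(y)
    w_bounce = w_sum / (num_candidates * luminance(li))
    # f(y) = rho * Li * cos(theta), with rho = albedo / pi for a Lambertian BRDF.
    return tuple(a / math.pi * l * y * w_bounce for a, l in zip(albedo_rgb, li))
```

With this toy `incoming_radiance`, the exact red-channel answer is albedo / 3, so averaging many `bounce_ris((0.8, 0.8, 0.8), 4, rng)` calls should approach about 0.267 in red, half of that in green and a quarter in blue.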

How is this helpful? Well, if we can use RIS, we can use reservoirs! Wait... what? Bear with me. One of the strengths of ReSTIR[7] is spatial resampling, i.e. sharing reservoirs among neighboring pixels. That happens in screen space, which might not be possible once rays are bouncing around the scene in world space, since the vertices further down a path may not even be visible on screen. For that we have ReGIR[5], ReSTIR's world-space cousin: it stores light samples in a world-space grid and uses said grid to get high-quality samples that help with NEE.

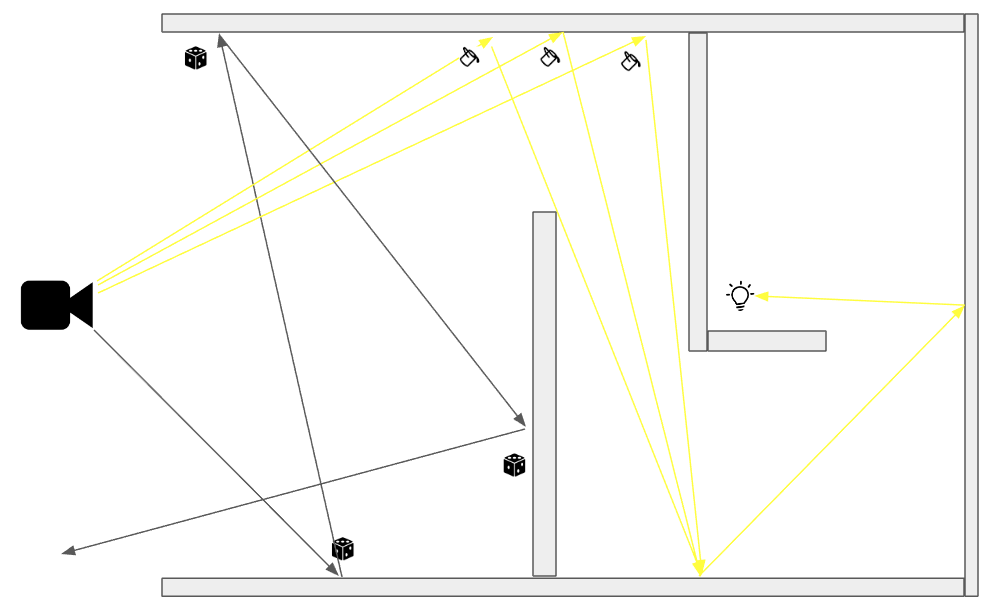

Extending ReGIR's idea, we can store reservoirs that represent paths in a grid, so that a bounce can find a better path faster. Let's call that ReGIR GI from now on. So how would the whole thing work?

First, we need to store paths in the grid as we generate them. Just make sure they do bring in some radiance and that they are reconnectable; there is no point in sharing samples that cannot be reused.
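Storing paths in a world-space grid could look something like the minimal Python sketch below. Everything here is hypothetical: the `PathSample` fields, the uniform cell indexing with `math.floor`, the fixed per-cell `capacity` (a plain bounded list stands in for the per-cell reservoirs) and the oldest-first eviction are my assumptions, not something prescribed by ReGIR[5]. The early-out implements the two filters from the paragraph above.

```python
import math
from dataclasses import dataclass, field


@dataclass
class PathSample:
    vertex: tuple            # world-space position of the bounce vertex that found the path
    reconnect_vertex: tuple  # vertex a later path would reconnect to
    luminance: float         # luminance the path brings back to `vertex`
    W: float                 # contribution weight of the reservoir that produced it
    reconnectable: bool      # e.g. the reconnection vertex has a rough or diffuse lobe


@dataclass
class PathGrid:
    """Hypothetical ReGIR GI grid: uniform world-space cells -> recent path samples."""
    cell_size: float
    capacity: int = 8        # assumption: fixed number of samples kept per cell
    cells: dict = field(default_factory=dict)

    def cell_of(self, position):
        return tuple(math.floor(c / self.cell_size) for c in position)

    def store(self, sample):
        # No point in sharing samples that bring in nothing or cannot be reused.
        if sample.luminance <= 0.0 or not sample.reconnectable:
            return False
        bucket = self.cells.setdefault(self.cell_of(sample.vertex), [])
        bucket.append(sample)
        if len(bucket) > self.capacity:
            bucket.pop(0)  # assumption: evict the oldest sample first
        return True

    def lookup(self, position):
        return self.cells.get(self.cell_of(position), [])
```

A real GPU implementation would more likely hash cells into a fixed-size buffer; a dictionary just keeps the sketch short.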

There are several opportunities to use our ReGIR GI samples. For example, when generating a new path, at a particular bounce we can resample both what is in the grid and the newly generated path segment using a bounce reservoir. I've found this particularly useful for second bounces. Of course, mind reconnectability (is this even a word?). For how to combine reservoirs and the equations you need, check [3] and [4].

If for some reason the grid does not contain a useful sample, our newly generated one will be selected, so it's all good.
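The resampling step at a bounce, including that fallback, might be sketched in Python like this. Assumptions, all mine: each candidate carries a contribution weight W (1/pdf for the fresh BRDF sample, the stored reservoir's W for a grid sample), candidates are combined with uniform 1/M MIS weights in the spirit of [4], and the shift-mapping Jacobian needed when a grid path is reconnected to a different vertex is omitted. `Candidate` and `resample_bounce` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Candidate:
    direction: Tuple[float, float, float]  # direction from the bounce vertex
    W: float     # 1/pdf for a fresh BRDF sample, the stored reservoir's W for a grid sample
    source: str  # "grid" or "new", for illustration only


def resample_bounce(grid_candidates, new_candidate, p_hat, rng):
    """Stream the grid candidates plus one fresh BRDF candidate through a single
    bounce reservoir. p_hat(c) is the target at *this* vertex (e.g. the luminance
    of the radiance arriving along c). Returns (selected candidate, W_bounce)."""
    candidates = list(grid_candidates) + [new_candidate]
    m_i = 1.0 / len(candidates)  # uniform (1/M) MIS weights
    selected = None
    w_sum = 0.0
    for c in candidates:
        w = m_i * p_hat(c) * c.W
        w_sum += w
        if w > 0.0 and rng.random() < w / w_sum:
            selected = c
    if selected is None:
        # Neither the grid nor the fresh sample brings in anything here.
        return new_candidate, 0.0
    return selected, w_sum / p_hat(selected)
```

If the grid cell is empty, the fresh sample is the only candidate and W_bounce is simply its own 1/pdf; if the grid candidates merely have p̂ = 0 at this vertex, the fresh sample is still the one selected. The bounce contribution is then f(y) · W_bounce.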

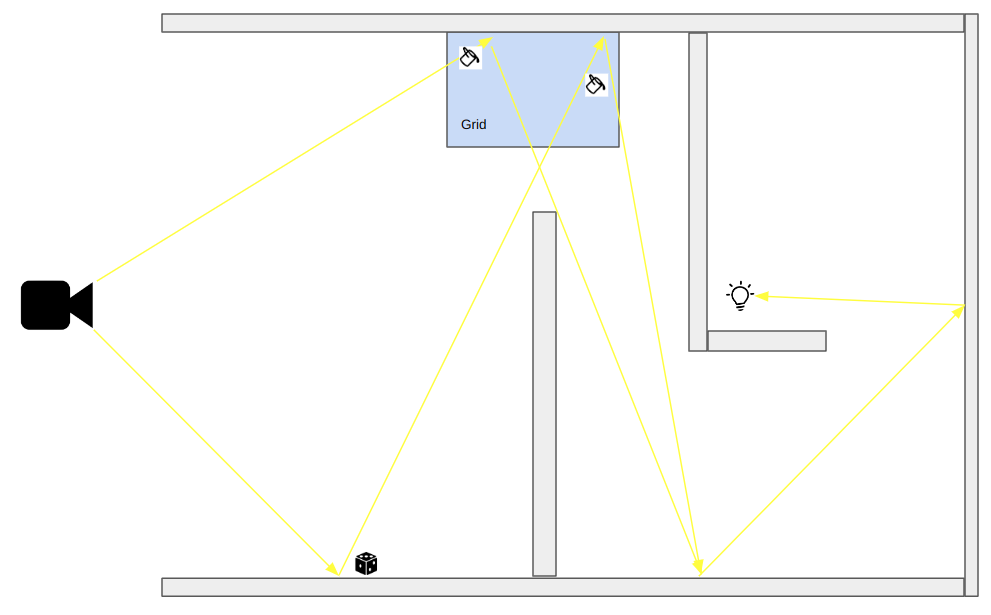

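So, after all that, the bounce contribution can be evaluated using our well-known RIS estimator:

$$L_o(x, \omega_o) \approx \rho(x, \omega_y, \omega_o)\, L_i(x, \omega_y)\, (N \cdot \omega_y)\, W_{\text{bounce}}, \qquad W_{\text{bounce}} = \frac{1}{\hat{p}(y)} \cdot \frac{1}{M} \sum_{i=1}^{M} w_i$$

where ωy is the direction toward the selected sample y, the wi are the resampling weights streamed through the bounce reservoir and Wbounce is the weight of our bounce reservoir. This estimates the Rendering Equation™ at the bounce.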

Recap:

1) Store reservoirs with paths in the grid as they are generated.

2) When generating a new path, at a bounce vertex, if the current lobe allows for reconnection, we can check the grid for known good paths and stream the reservoirs in it, along with a new sample, through a bounce reservoir.

3) Reconnect to the selected path.

So it would look like this:

Once a good path is found, it can be stored in a screen-space reservoir and shared as usual, as we would do with ReSTIR GI/PT.

Let's talk about reconnection. We need to be able to connect one path to another: if we are at vertex xi and are trying to connect to vertex yi, we need to make sure that the direction ωi (from xi to yi) lies within the lobes we are reconnecting. This works well for diffuse materials and rough ones (roughness above roughly 20%). (You can always uniformly sample the specular lobe; for that kind of nonsense, check [6].)
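A minimal Python sketch of that check, assuming a hypothetical `Material` with a scalar roughness and a threshold of 0.2 taken from the rule of thumb above. Both lobes are tested because the new edge changes the directions at both of its ends, and the reconnection direction must leave xi on the side of its normal and arrive at the front face of yi. A real renderer would also trace a shadow ray between the two vertices, which is left out here.

```python
from dataclasses import dataclass

ROUGHNESS_THRESHOLD = 0.2  # rule of thumb from the text: above roughly 20%


@dataclass
class Material:
    roughness: float          # 0 = perfect mirror, 1 = fully rough
    is_diffuse: bool = False


def lobe_is_wide(material):
    return material.is_diffuse or material.roughness > ROUGHNESS_THRESHOLD


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def can_reconnect(x_i, n_x, mat_x, y_i, n_y, mat_y):
    """Can vertex x_i (normal n_x) be reconnected to stored vertex y_i (normal n_y)?
    Visibility between the two still needs a shadow ray, which is not done here."""
    if not (lobe_is_wide(mat_x) and lobe_is_wide(mat_y)):
        return False
    d = [b - a for a, b in zip(x_i, y_i)]
    length = dot(d, d) ** 0.5
    if length == 0.0:
        return False
    w_i = [c / length for c in d]
    # w_i must leave x_i on the side of its normal and arrive at y_i's front face.
    return dot(n_x, w_i) > 0.0 and dot(n_y, w_i) < 0.0
```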

But what happens when we are dealing with highly specular materials, where the lobe is very narrow? Can we do something about them?

ReSTIR PT brings in the ideas of random replay and delayed reconnection: when we can't reconnect, we replay the random numbers of the selected path, which generates a similar direction at our vertex, and if we can reconnect at the next vertex, we do so there. For an in-depth discussion of reconnection and random replay, check [4].
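Random replay only works if a path's random numbers can be regenerated, which in practice means storing a seed with the path. Here is a minimal Python sketch under my own simplifications: one (u1, u2) pair per bounce, a list of per-vertex roughness values, and the rule from the text of replaying bounces until the first vertex that is rough enough and reconnecting from there. ReSTIR PT[4] uses a more careful criterion for choosing the reconnection vertex; `plan_shift` is a hypothetical helper.

```python
import random


def plan_shift(seed, roughness, threshold=0.2):
    """roughness[k] is the roughness at path vertex k (0 = primary hit).
    Returns (replayed random numbers for the bounces before the reconnection,
    index of the vertex we reconnect from), or None if no vertex is rough enough."""
    for k, r in enumerate(roughness):
        if r > threshold:
            # Random replay: re-seeding reproduces the selected path's random
            # numbers, one hypothetical (u1, u2) pair per bounce to replay.
            rng = random.Random(seed)
            replayed = [(rng.random(), rng.random()) for _ in range(k)]
            return replayed, k
    return None
```

For a mirror floor seen directly from the camera (vertex 0, roughness 0) with a rough ceiling above it (vertex 1), the floor bounce is replayed with the stored random numbers and the reconnection happens from the ceiling.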

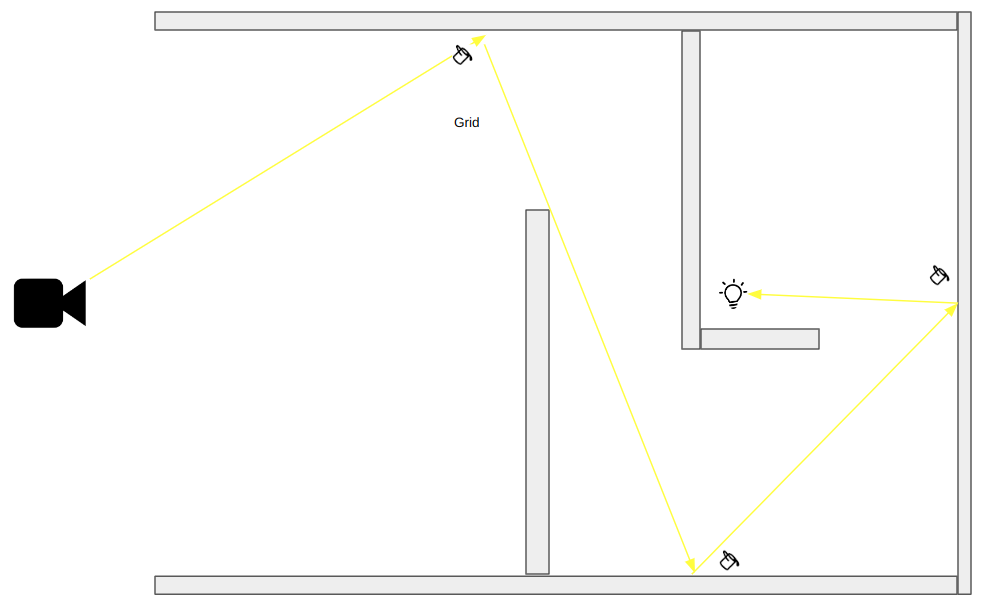

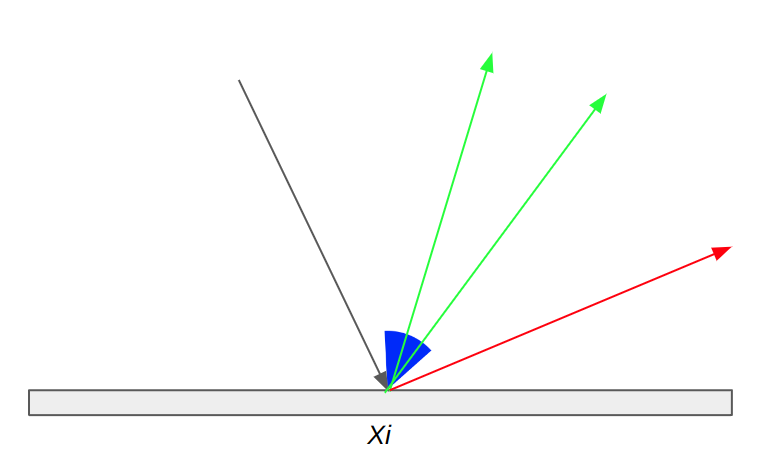

Let me illustrate how that would work in our case using the following scene:

The floor is a highly specular (0% roughness) dielectric material. Trying to reconnect at the first bounce would yield a bunch of black pixels, since the specular lobe is really narrow and the reconnection direction almost never falls inside it. So when resampling, instead of trying to reconnect, we replay the random numbers of the selected reservoir, and then, at the next vertex, if the material is rough enough, we reconnect.

In this particular case, when generating new paths, once the first bounce reflects off the floor into the ceiling, we use our grid to find a better path, which sends us straight back down to the floor's diffuse lobe, where the floor is lit by the lamp above it. That path is then shared during resampling, and we get more stable samples.

My constant misuse of ReSTIR/ReGIR is getting out of hand, but fear not, I've got even more offensive uses for them.

Footnotes

[1] Ray Tracing Gems II, Chapter 14: "The Reference Path Tracer"

[2] James Kajiya, "The Rendering Equation"

[3] "ReSTIR GI: Path Resampling for Real-Time Path Tracing"

[4] "Generalized Resampled Importance Sampling: Foundations of ReSTIR"

[5] Ray Tracing Gems II, Chapter 23: "Rendering Many Lights with Grid-Based Reservoirs"

[6] "ReSTIR GI for Specular Bounces"

[7] "Spatiotemporal Reservoir Resampling for Real-Time Ray Tracing with Dynamic Direct Lighting"